Curse your valentine with these candy hearts

When I’ve generated candy heart messages before, they’ve been text only - definitely some assembly required. But methods of generating custom images from descriptions are getting better all the time. Could I use modern machine learning to generate new candy hearts?

The answer is no.

For the image above, I gave the prompt “A candy heart that says Be Mine” to a program I’ve used to make AI-generated images before. Ryan Murdock’s Big Sleep program uses OpenAI’s CLIP algorithm to judge how well one of BigGAN’s generated images matches my caption, and to try to direct the generated BigGAN images toward a closer match. This candy heart, only roughly heart-shaped and weirdly fleshy, is the best match it found.
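If you're curious what that judge-and-nudge loop looks like, here's a toy sketch. It is not Big Sleep's actual code: `toy_generator` and `toy_score` are hypothetical stand-ins I made up for BigGAN and CLIP, the "caption" is just a short list of numbers, and I've swapped in simple random hill climbing for whatever optimization the real program uses. The only thing it's meant to show is the shape of the idea: propose an image, score it against the caption, keep the changes that score better.

```python
import math
import random


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def toy_generator(latent):
    """Hypothetical stand-in for BigGAN: turns a latent vector into 'image' features."""
    return [math.tanh(z) for z in latent]


def toy_score(image, caption_embedding):
    """Hypothetical stand-in for CLIP: how well does the image match the caption?"""
    return cosine(image, caption_embedding)


def search(caption_embedding, steps=2000, step_size=0.1, seed=0):
    """Random hill climbing: nudge the latent and keep nudges that score higher."""
    rng = random.Random(seed)
    latent = [rng.gauss(0, 1) for _ in caption_embedding]
    start = toy_score(toy_generator(latent), caption_embedding)
    best = start
    for _ in range(steps):
        candidate = [z + rng.gauss(0, step_size) for z in latent]
        score = toy_score(toy_generator(candidate), caption_embedding)
        if score > best:  # the judge likes it better, so keep it
            latent, best = candidate, score
    return start, best, latent


# Made-up numbers standing in for the caption "A candy heart that says Be Mine"
start, best, latent = search([0.9, -0.4, 0.2, 0.7])
print(f"score before: {start:.3f}, score after: {best:.3f}")
```

Note that nothing in the loop checks whether the result looks like a candy heart to a human. It only checks whether the judge's score went up.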

Here’s its attempt at “a candy heart that says Kiss Me”.

It doesn’t necessarily do better when the text I ask for is more likely to appear on a candy heart.

Here’s “A candy heart that says HOLE.”

Disturbingly legible.

I had wondered if I could get CLIP + BigGAN to generate brand-new candy heart messages, but that was even less effective. Here’s “A candy heart with a message”, whose message is apparently desperately crammed onto every millimeter of the candy, so deeply that the sugar begins to split.

The British version, “a conversation heart,” fared no better, but is on a bird for some reason so that’s nice.

I wondered if specifying a shorter message might help it produce something more legible.

Here’s “A candy heart with a two word message.” I don’t know what this rune summons, but it probably has lots of legs.

“A message spelled in three candy hearts” receives only partial credit, depending on whether you think these adequately convey the message “RUN”.

I wondered about some recurring textures I was seeing on CLIP + BigGAN’s candy hearts. Was it a coincidence that many of the “candy hearts” looked weirdly lumpy and fatty and blood-colored? I asked it to find an optimum picture for “a heart”, and this is what it produced.

And here’s what it generated when I asked for “a candy”.

These look so much like its “a candy heart that says Kiss Me” that I wonder if, in an attempt to get extra credit, the AI is searching for images that closely match not only “candy heart” but also “candy” and “heart” in as many simultaneous senses of these words as it can. It generated not only a candy heart, but a cellophane-wrapped heart.

This seems even clearer in its response to “A candy heart that says Stank Love.”

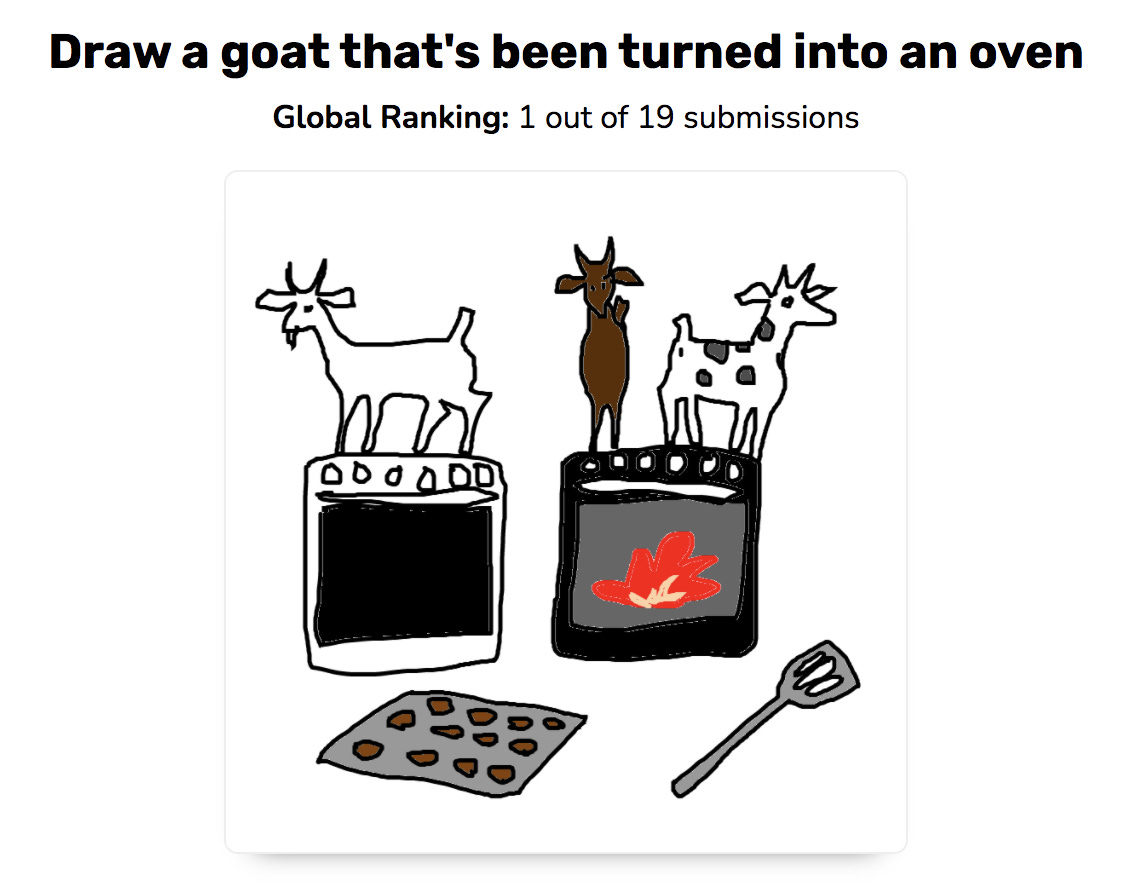

Accuracy is only one route toward having CLIP agree that a particular image matches its caption. Sometimes it’s enough to combine the caption’s keywords. This seems to work, at least some of the time, as a strategy on paint.wtf, a game where CLIP ranks your sketches. At the time of writing, my drawing of “a goat that’s been turned into an oven” is ranked #1, ahead of a bunch of entries that are actual oven-goats. (My entry for “someone who knows how to fly”, a stick figure with big wings in the sky with butterflies and houseflies and a book, didn’t do nearly so well, so who knows.)

So what happens if I simply ask CLIP + BigGAN for “a candy heart”? Does it write a legible message? Or at least combine organs and cellophane wrappers in an interesting way? I regret to inform you that it does this:

I had fun combining candy hearts with a few other prompts (a candy heart from a robot, a candy heart for a giraffe, a candy heart that says LASERS, etc.). To read a bonus post with some of these results, become an AI Weirdness supporter! Or become a free subscriber to get new AI Weirdness posts in your inbox.